So I was curious and got a copy of PhotoScan. I shot a little tiki statue that I have; being carved and wooden, it seemed like the kind of thing @edzacly1 might be working on. For lighting I used just ordinary indirect daylight, at f/16 with 1.5-second exposures (obviously with a tripod and remote shutter release). The camera was a Nikon D200 with a 50 mm f/1.4 lens. I set the tiki on a Shimpo ceramics turntable, which I covered with a sheet of white paper with a hole in it for the tiki, and put another piece of white paper in the background. Like so:

And so:

I let the camera autofocus, then switched the focus to manual so it would stay consistent across all the photos. I took 38 photos (TIFF format), rotating the turntable a little bit at a time.

I then used GIMP (an open source cousin of Photoshop) to mask the photos, removing (almost) everything but the tiki, and saved the results as TIFFs again:

I then imported them into PhotoScan and, after playing around with the settings, ultimately ended up with an STL file and an OBJ file. The results I wanted took less than 2 hours of processing in PhotoScan on my bottom-of-the-line Mac mini, plus the hour it took me to do the masking. The model that PhotoScan made from the photos (and the point cloud derived therefrom) was 2.5 mm tall when I imported it into MeshCam. Unfortunately, MeshCam was able to scale it only about 3x. So I took the STL into Evolve (what I use for my 3D modeling of parts to be milled) and scaled it 64x so that it was about 6-7 inches tall (a little smaller than life size, which is a bit over 8 inches). While I was in Evolve, I had some fun. I think maybe Dr. Jones would approve:
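For anyone wanting to check the scaling numbers, here is a rough sketch of the arithmetic. The 2.5 mm and 64x figures are from above; the `scale_factor` helper and the choice of exactly 8 inches for "life size" are just assumptions for illustration, not anything Evolve or MeshCam provides.

```python
MM_PER_INCH = 25.4


def scale_factor(current_height_mm: float, target_height_mm: float) -> float:
    """Uniform scale factor taking a model from its current height to a target height."""
    if current_height_mm <= 0:
        raise ValueError("current height must be positive")
    return target_height_mm / current_height_mm


imported_height_mm = 2.5  # height reported on import (from the post)
applied_scale = 64        # factor applied in Evolve (from the post)

scaled_height_mm = imported_height_mm * applied_scale
scaled_height_in = scaled_height_mm / MM_PER_INCH
print(f"{scaled_height_mm:.1f} mm = {scaled_height_in:.2f} in")

# Hypothetical target: full life size, taking "a bit over 8 inches" as 8 in.
life_size_factor = scale_factor(imported_height_mm, 8 * MM_PER_INCH)
print(f"life-size factor: {life_size_factor:.1f}x")
```

So 64x lands at 160 mm, a bit over 6 inches, and full life size would need a factor somewhere around 80x, assuming the 2.5 mm import height is accurate.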

Or maybe more dramatic:

Or just plain wood:

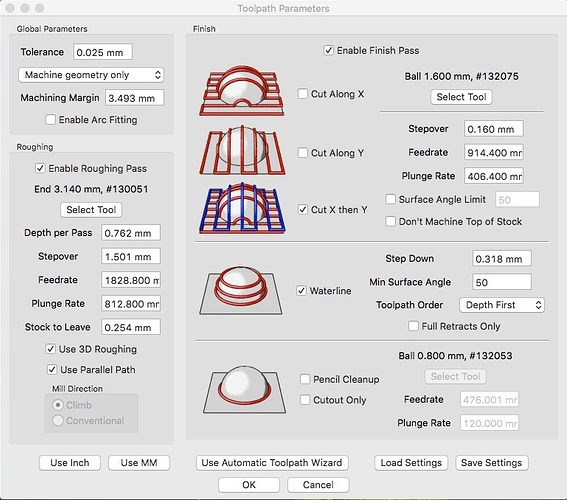

Finally, I tried importing again into MeshCam. I added tabs at the top and bottom, and used the following settings to generate gcode (using 1/8" square and 1/16" ball nose mills):

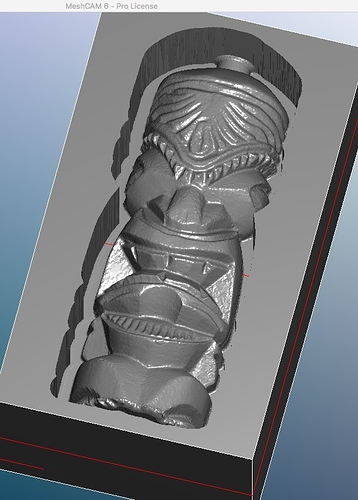

The predicted cutting time is something like 333 minutes (about five and a half hours). Here’s the simulated first side (I generated only one side):

This is for a blank that’s 101.6 x 177.8 x 60.0 mm (4" x 7" x about 2.4"). I haven’t run this on my Nomad yet, but might try in a few weeks when I have a little more time. If anybody wants to have a go, here’s the gcode.
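If you want to sanity-check the blank before cutting, something like this works as a rough sketch. It assumes the model is 160 mm tall (2.5 mm scaled 64x) and that its height runs along the longest side of the blank, which I haven't stated explicitly anywhere, so treat both as assumptions.

```python
MM_PER_INCH = 25.4

blank_mm = (101.6, 177.8, 60.0)  # blank dimensions from the post
model_height_mm = 2.5 * 64       # assumed: 2.5 mm import height scaled 64x

# Convert the blank to inches, rounded to two decimals.
blank_in = tuple(round(d / MM_PER_INCH, 2) for d in blank_mm)
print(blank_in)

# Assumption: the model's height lies along the blank's longest side,
# so whatever is left over is what the top and bottom tabs have to share.
spare_mm = max(blank_mm) - model_height_mm
print(f"spare length: {spare_mm:.1f} mm ({spare_mm / 2:.1f} mm per end if centred)")
```

Under those assumptions there is a little under 18 mm of stock left lengthwise for the tabs.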

Rough:

tiki pine (4500 rpm) 1 rough.nc (1.0 MB)

Waterline:

tiki pine (4500 rpm) 2 waterline.nc (1.4 MB)

Parallel:

tiki pine (4500 rpm) 3 parallel.nc.zip (2.6 MB)

Note that the last one is zipped. Unfortunately, the original STL from PhotoScan was 25 MB, and after scaling in Evolve it was 110 MB. So obviously I can’t post them here.

The proof, of course, is in the milling, which I haven’t done yet. But this seems like a pretty useful way of acquiring models for milling.