The key points:

- scanning produces point-clouds, which get sampled/processed into meshes

- meshes are noisy representations of a surface, and the computer doesn’t know how to “automagically” turn them into a clean mathematical definition of design intent. Even with very expensive software you’re adjusting things by hand, with expert knowledge, to get them right.

- noisy surfaces SUCK for CNC machining because of the physics of cutting things with a spinning tool (instead of burning them with light): namely the torque/moment forces involved, inertia, the stiffness of the materials the machine is built from, the physical resonance/vibration of the machine, and so on.

- the scan data is frequently very “off” from the target surface, by as much as ~5% on commodity scanners (speaking from experience, not advertised specs)

- Scanning would be extremely difficult in a machining environment. A laser vaporizes the material it removes, and a vacuum system then carries it away; a CNC machine throws chips everywhere. It is very dusty inside a CNC machine, and/or slippery/oily from lubricants/coolants, which makes any non-contact metrology hard.

And now more of the novella answer, elaborating on why things are never as simple as you’d like:

As someone who has done quite a bit with scanning and reconstruction of CAD data from scans, and who used to sell the $20k+ scanners (Creaform & Konica Minolta, if you want to know brands), let me tell you this process is NOT easy, nor automatic (not even remotely), even with moderately sophisticated software. Even with very expensive software (RapidForm/Geomagic packages) you’re almost ALWAYS left editing things by hand to get it to what you need.

The problem with scanning is that it can’t easily infer design intent, and without knowing the design intent you’re working with a noisy observation of a thing instead of a clean definition of a thing.

In most CAD packages (NURBS/“Solids” modelers) surfaces are defined mathematically and as simply as possible, not as meshes (which is how the scan data comes in, if not as a point-cloud). The typical workflow is to “re-fit” your best-guess/best-case definitions of the surfaces over the scan, then look at the deviation between your scanned data and the CAD you’ve drawn from it, and see if it’s “good enough” for your application. Often your scan data is crap and very noisy, so you have to check that your re-model from the mesh is even close to where the actual geometry on the source part was.
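To make the re-fit-and-compare step concrete, here is a minimal Python sketch. It is not what RapidForm or Geomagic actually do. It assumes the simplest possible case: a straight edge scanned as noisy 2D points, re-fit with an ordinary least-squares line, then checked against a tolerance. The noise level, the tolerance, and the function names are all made up for illustration.

```python
import math
import random

def fit_line(points):
    """Ordinary least-squares fit of y = m*x + b to (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    m = sxy / sxx
    return m, mean_y - m * mean_x

def max_deviation(points, m, b):
    """Largest perpendicular distance from any point to the fitted line."""
    return max(abs(m * x - y + b) / math.hypot(m, 1.0) for x, y in points)

# Hypothetical "scan": an edge with true slope 0.5 and intercept 2.0 (mm),
# sampled every 0.5 mm with 0.05 mm of Gaussian noise (assumed values).
random.seed(0)
scan = [(i * 0.5, 0.5 * (i * 0.5) + 2.0 + random.gauss(0.0, 0.05))
        for i in range(200)]

m, b = fit_line(scan)
worst = max_deviation(scan, m, b)
tolerance_mm = 0.25  # assumed "good enough" threshold for this application

print(f"fitted edge: y = {m:.4f}*x + {b:.4f}")
print(f"worst deviation from the scan: {worst:.3f} mm")
print("good enough" if worst <= tolerance_mm else "go back and re-fit")
```

A real part needs planes, cylinders, and NURBS patches instead of one line, and deciding which primitive belongs where is exactly the by-hand part.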

As @mbellon said, it’s hard to get a part accurate to the manufacturing tolerances the end customer/user needs. I’d argue it’s hard to even get 0.01" precision on most raw scan data, let alone 0.001". Error adds up quickly in scans, so the larger your part, the more it’s going to be off. Scanning error is usually quoted as some amount of error per distance scanned, so “0.1mm per 100mm” or something like that.
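As a rough back-of-envelope check on those numbers, here is a small Python sketch. It assumes the error simply grows linearly with the distance scanned, which is a simplification, and it uses the illustrative “0.1mm per 100mm” figure rather than any real scanner’s spec. The function names are hypothetical.

```python
MM_PER_INCH = 25.4

def accumulated_error_mm(part_length_mm, error_mm=0.1, per_mm=100.0):
    """Scan error over a part, assuming it grows linearly with length."""
    return part_length_mm * error_mm / per_mm

def max_length_within(tolerance_mm, error_mm=0.1, per_mm=100.0):
    """Longest part whose accumulated error stays within the tolerance."""
    return tolerance_mm * per_mm / error_mm

# How big can the part be before the scan alone eats the whole tolerance?
for tol_in in (0.01, 0.001):
    tol_mm = tol_in * MM_PER_INCH
    print(f'{tol_in}" = {tol_mm:.4f} mm -> part must be under '
          f"{max_length_within(tol_mm):.1f} mm")

print(f"300 mm part: about {accumulated_error_mm(300.0):.2f} mm of error")
```

Under these assumptions the scan uses up a 0.01" tolerance at roughly 254 mm of part, and a 0.001" tolerance at roughly 25 mm, before any machining error is added on top.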

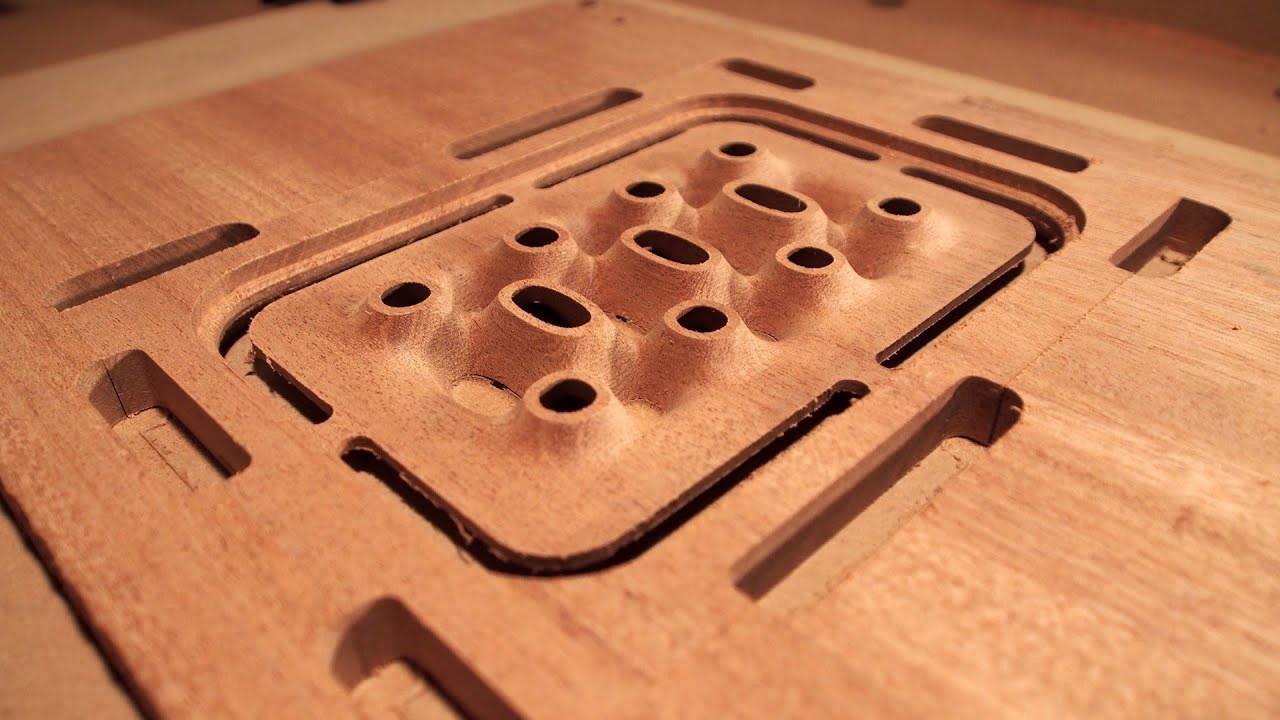

Now, the reasons you need a nice clean, mathematical definition of your surfaces are many, but in particular you’ll discover as you do more CNC just how much motion planning goes into driving the CNC machine around, and how complicated the calculations for the tool-paths can be. This is the “CAM” part of the problem, and it’s already very non-trivial to get the right parameters for high-quality clean cuts on even fairly simple cutting paths that travel in clean lines and arcs, to say nothing of complicated jerky movements that follow the surface of a scanned mesh. In driving any physical machine you want as smooth a movement as possible, but this is especially true of a CNC machine.

This is because, unlike a laser, you’ve got to deal with a lot of inertia and forces that change rapidly and in a dynamic way as the tool engages more or less material, as it wears, and as the motors are working with and against the machine’s own inertia and stiffness characteristics. With a laser, it’s looking at a 2d picture and driving a known mass (gantry with mirrors & lenses) around and turning the laser on and off—there’s no sudden spike in torque as a tool first starts to cut into fresh material, or sudden surge of too much inertia when it breaks out of the material.

So that means you need clean, smoothly defined surfaces as much as possible for CNC machining, to make smooth tool-paths. You’ll discover as you go to cut lots of small details that require constant path-direction changes that the machine has to go very slowly, because it can’t build up any momentum. It’s constantly having to slow back down to go a different way—which means detailed parts or parts with noisy tool-paths take longer, and they also require greater stiffness in the machine and have a higher likelihood of errors in the final cut part because of the flex in the structure of the machine at each change in tool-engagement force.
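Here is a minimal Python sketch of why lots of direction changes are slow. It uses a deliberately pessimistic model: the machine comes to a full stop at every vertex and runs a simple trapezoidal velocity profile on each straight segment. Real controllers blend corners and usually do better than this, and the feed rate and acceleration values are assumptions picked only for illustration.

```python
import math

def segment_time(length, v_max, accel):
    """Seconds to traverse one straight segment, starting and ending at rest."""
    # Distance used up accelerating to v_max and braking back to zero.
    ramp_dist = v_max ** 2 / accel
    if length >= ramp_dist:
        # Trapezoid: ramp up, cruise at v_max, ramp down.
        return 2 * v_max / accel + (length - ramp_dist) / v_max
    # Triangle: the segment is too short to ever reach v_max.
    return 2 * math.sqrt(length / accel)

def path_time(total_length, n_segments, v_max=50.0, accel=500.0):
    """Time for a path split into equal segments (mm, mm/s, mm/s^2)."""
    seg = total_length / n_segments
    return n_segments * segment_time(seg, v_max, accel)

smooth = path_time(100.0, 1)    # one clean 100 mm move
noisy = path_time(100.0, 200)   # same distance as 200 tiny 0.5 mm moves

print(f"smooth path: {smooth:.2f} s")
print(f"noisy path:  {noisy:.2f} s ({noisy / smooth:.1f}x slower)")
```

Same distance travelled, but the chopped-up path never gets anywhere near the commanded feed rate, which is the “can’t build up any momentum” problem in numbers.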

Now, with all that said…

I think what you’re really asking for is “could the machine see my stock that I’ve flipped over, and automatically register the machining origin in order to not have misalignment between the ‘top’ and ‘bottom’ sides?”

And that’s a much easier thing to do, but typically it’s done with a contact probe in fancy expensive machines, or the stock is re-zeroed by hand on simpler setups. If you were to do it optically it’d still require some fancy computing and precise optics because the materials you typically machine are shiny, and reflections are very bad for scanning, generally speaking. This is also assuming that you’re going to thoroughly clean out your machine before running such an operation, and make sure there isn’t dust or coolant on the lenses or the stock.

There are people in this forum who have implemented their own cameras for registration on their machines, where they’re using it as an aid to aligning the origin of their CAM tool-paths to the stock corner or other reference point—but it’s still not automagic.
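For a feel of what that registration involves once you have located two reference points (by probe, camera, or eyeball), here is a hedged Python sketch. It assumes a flat 2D problem, a rigid part, and two reference points whose positions are known in the CAM file and measured on the machine. The function names and numbers are hypothetical, not anyone’s actual implementation.

```python
import math

def register_two_points(design_a, design_b, measured_a, measured_b):
    """Rotation (radians) and translation mapping CAM coords to machine coords."""
    ddx, ddy = design_b[0] - design_a[0], design_b[1] - design_a[1]
    mdx, mdy = measured_b[0] - measured_a[0], measured_b[1] - measured_a[1]
    # Rotation is the angle between the design and measured reference vectors.
    angle = math.atan2(mdy, mdx) - math.atan2(ddy, ddx)
    c, s = math.cos(angle), math.sin(angle)
    # Translation makes the rotated design point A land on measured point A.
    tx = measured_a[0] - (c * design_a[0] - s * design_a[1])
    ty = measured_a[1] - (s * design_a[0] + c * design_a[1])
    return angle, tx, ty

def to_machine(point, angle, tx, ty):
    """Apply the registration to one CAM-space point."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * point[0] - s * point[1] + tx,
            s * point[0] + c * point[1] + ty)

# Assumed example: stock flipped and re-clamped 1 degree crooked,
# with its corner sitting at (10, 5) mm in machine coordinates.
skew = math.radians(1.0)
found_a = (10.0, 5.0)
found_b = (10.0 + 100.0 * math.cos(skew), 5.0 + 100.0 * math.sin(skew))

angle, tx, ty = register_two_points((0.0, 0.0), (100.0, 0.0), found_a, found_b)
print(f"rotation: {math.degrees(angle):.3f} deg, offset: ({tx:.3f}, {ty:.3f}) mm")
print("CAM point (50, 20) lands at", to_machine((50.0, 20.0), angle, tx, ty))
```

The math is the easy part. Finding those two reference points accurately on shiny, dusty, or oily stock is where the trouble is.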

Hopefully that at least provokes some interest in learning more about the topics involved, and gives you a new respect for the technology we already have.